Two firms in the big data and analytics space, Tableau and Hortonworks, went through a critical moment when their reported results missed the forecast by $0.05 and their stock dropped by five percent. This happens frequently, and it leaves many observers with no clue about what is going on in the business intelligence (BI) and Hadoop space. Should companies run from BI and big data before the space collapses completely?

Instead of focusing on the sensational headlines, investors and corporate technology leaders should look at what the missed forecasts reveal: they often point to important trends and analysis that can help them grow.

Companies also need to look at their results in the context of the industry as a whole. Gartner's analysis of worldwide IT spending in dollar terms shows that IT spending was flat in 2016, with 0.0% growth. By that benchmark, 35% growth is exceptional, and Hortonworks' results for the last quarter were stronger still: total revenue grew 46% year over year.

This means investor expectations have grown so high that they are hard to meet. To put such results in perspective, industry observers and technology buyers should benchmark the performance of both organizations against the rest of the industry before drawing an informed conclusion.

According to a recent report, Teradata's revenue shrank by 4% year over year. All else being equal, this analysis suggests that Hortonworks could generate more revenue than Teradata by 2020.
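The "all else being equal" projection is compound growth. The sketch below is a minimal illustration, assuming each company's growth rate stays constant every year. The 46% and -4% rates come from the figures quoted above, but the starting revenues (company A at one tenth of company B) are hypothetical placeholders, not either firm's reported revenue, and `years_to_overtake` is a hypothetical helper.

```python
def years_to_overtake(rev_a, growth_a, rev_b, growth_b, max_years=50):
    """Return the first year in which company A's revenue exceeds company B's,
    assuming both compound at constant annual rates (all else equal).
    Returns None if that does not happen within max_years."""
    for year in range(1, max_years + 1):
        rev_a *= 1 + growth_a  # e.g. 0.46 means +46% per year
        rev_b *= 1 + growth_b  # e.g. -0.04 means -4% per year
        if rev_a > rev_b:
            return year
    return None


# Hypothetical inputs: A starts at one tenth of B's revenue.
print(years_to_overtake(1.0, 0.46, 10.0, -0.04))  # 6
```

With these placeholder inputs, A overtakes B in year 6; plugging in actual reported revenues is what would test the 2020 claim.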

Let's look at some of the data analysis pitfalls you should avoid before you get sucked in.

Confirmation Bias

Suppose you have a hypothesis in mind, but you look only for the data patterns that support it and ignore every data point that contradicts it. Let's see what happens.

Say you analyze a landing page that performed well and find that its conversion rate is much higher than average. Following this approach, you might use that page as the sole data point to prove your hypothesis, while completely ignoring the fact that its leads may not qualify or that the traffic to the page may be sub-par.

An important rule of thumb: never approach data exploration with a fixed conclusion in mind. Most professional data analysis methods are built so that you test your hypothesis and then either reject it or fail to reject it, rather than set out to prove it.
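The landing-page trap can be made concrete with a few numbers. This is a minimal sketch; the page names and their visits, conversions, and qualified-lead counts are invented for illustration. It contrasts the cherry-picked view (only the best-looking page) with the full view (all pages pooled, plus lead quality).

```python
def conversion_rate(visits, conversions):
    """Conversions per visit; 0.0 when there were no visits."""
    return conversions / visits if visits else 0.0


def qualified_share(conversions, qualified):
    """Fraction of conversions that turned into qualified leads."""
    return qualified / conversions if conversions else 0.0


# Hypothetical results: page -> (visits, conversions, qualified leads)
PAGES = {
    "page_a": (200, 30, 3),
    "page_b": (1500, 45, 30),
    "page_c": (1200, 36, 25),
}

# Cherry-picked view: the single best-looking page.
best = max(PAGES, key=lambda p: conversion_rate(PAGES[p][0], PAGES[p][1]))
v, c, q = PAGES[best]
print(best, round(conversion_rate(v, c), 3), round(qualified_share(c, q), 3))
# page_a 0.15 0.1

# Full view: pool every page before drawing a conclusion.
v_all = sum(p[0] for p in PAGES.values())
c_all = sum(p[1] for p in PAGES.values())
q_all = sum(p[2] for p in PAGES.values())
print(round(conversion_rate(v_all, c_all), 3), round(qualified_share(c_all, q_all), 3))
# 0.038 0.523
```

The "winning" page converts at 15%, but only 10% of its conversions become qualified leads, versus about 52% across all pages.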

Correlation vs. Causation

Confusing correlation with causation is a common mistake. When one action causes another, the two are almost certainly correlated. However, just because two things occur together doesn't mean that one caused the other, even when it seems to make some sense.

You might find a strong positive association between high website traffic and high revenue, but that doesn't mean high website traffic is the cause of high revenue. There might be an indirect cause, or a common cause of both, that makes high revenue more likely to occur when high website traffic occurs.

For example, in a classic B2B data set you might find a strong association between the number of leads and the number of opportunities, and conclude that gathering a high volume of leads will produce a high number of opportunities. The correlation alone does not prove that.

Here are some more things that you need to watch when doing data analysis:

• Do not compare unrelated data sets or data points and conclude relationships or similarities.

• Do not base decisions on the analysis of incomplete or "poor" data sets.

• Do not analyze the data sets without considering other data points that might be critical for the analysis.

• Do not group data points together and treat them as one. For example, lumping unique visits and total visits to your website into a single figure inflates the actual number of visitors and distorts the conversion rate.

• Do not ignore simple mistakes and oversights, which can happen at any time.
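The visits-versus-visitors mix-up from the list above can be checked directly on a visit log. This minimal sketch uses a made-up log of (visitor ID, converted-on-this-visit) pairs and computes the conversion rate against both denominators.

```python
# Hypothetical visit log: (visitor_id, converted_on_this_visit)
visits = [
    ("v1", False), ("v1", False), ("v1", True),
    ("v2", False),
    ("v3", False), ("v3", True),
    ("v4", False), ("v4", False),
]

total_visits = len(visits)                      # every session counts
unique_visitors = len({vid for vid, _ in visits})  # each person once
converters = len({vid for vid, converted in visits if converted})

print(total_visits, unique_visitors)             # 8 4
print(round(converters / total_visits, 2))       # 0.25  (per visit)
print(round(converters / unique_visitors, 2))    # 0.5   (per visitor)
```

Treating total visits as if they were visitors doubles the visitor count here and halves the per-visitor conversion rate, so keep the two counts separate and state which denominator each rate uses.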

...

WebSphere Application Server is a software product that performs the role of a web application server. WebSphere is IBM's flagship brand, a set of Java-based tools that allows customers to create and manage sophisticated business websites. It was originally created by Donald F. Ferguson, who later became CTO of Software at Dell, and the first version was launched in 1998.

The central WebSphere tool is WebSphere Application Server (WAS), an application server that connects website users with servlets or other Java applications. A servlet is a Java program that runs on the server rather than on the user's machine, as Java applets do. Servlets were developed to replace traditional Common Gateway Interface (CGI) scripts, which are usually written in C or Perl (Practical Extraction and Report Language). Servlets run much faster because all user requests run in the same process space.

In addition to Java, WebSphere supports open-standard interfaces such as the Common Object Request Broker Architecture (CORBA) and Java Database Connectivity (JDBC). WebSphere is designed to be used across different operating system platforms. You can choose the edition that suits your business: one edition is offered for small and medium-sized businesses, and another for larger businesses with a high volume of transactions. There is also WebSphere Studio, a developer's environment with additional components for creating web pages for a website and managing them. Both editions support Solaris, Windows NT, OS/2, OS/390, and AIX.

WebSphere Studio also includes a copy of the Apache web server, which lets developers test web pages and Java applications at any time.

What is WebSphere?

When you hear this question, the first thing that comes to mind is that WebSphere is an application server, but in reality WebSphere is a product brand in the IBM family. IBM offers many products under the WebSphere name, including WebSphere Application Server, WebSphere MQ, WebSphere Message Broker, WebSphere Process Server, WebSphere Business Modeler, WebSphere Business Monitor, WebSphere Integration Developer, and WebSphere Partner Gateway.

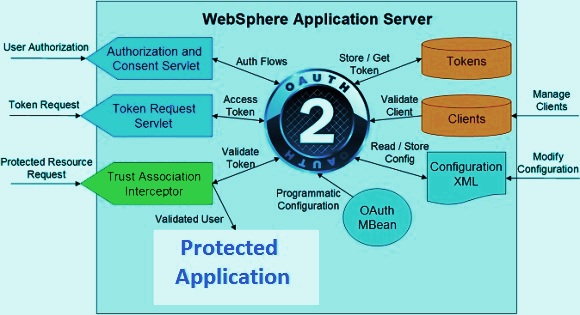

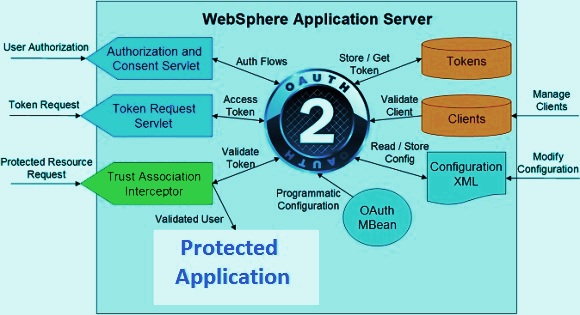

WebSphere Application Server Architecture

WAS is built using open standards such as Java EE, XML, and Web Services. It is supported on the following platforms: Linux, Windows, AIX, Solaris, IBM i, and z/OS. Starting with Version 6.1 and continuing through the latest version, 8.5, the open and standard specifications are aligned across all platforms. Platform-specific exploitation still takes place to an extent, but it is now done below the open-standard specification line.

Security

The WebSphere Application Server security model builds on the services provided by the operating system and on the Java EE security model. WebSphere Application Server implements user authentication and authorization mechanisms and supports various user registries:

• Local operating system user registry

• LDAP user registry

• Federated user registry (version 6.1)

• Custom user registry

The authentication mechanisms supported by WebSphere are

...

The biggest question circulating in the industry is why one should go permanent rather than temporary. There are many pros and cons on both sides; many people think they can earn more in a permanent position, along with many benefits. In some instances this may be true, and in others it may not. You have to look at the amount of work over a whole year and at the bigger picture, because a permanent role might not always be the best thing to jump into.

Taking a good break, moving in the right direction, and climbing the ladder will bring real progress in your career. You see this a lot with senior and permanent employees, who earn as much as they can from their professional and personal experience. Beyond salary, each type of role also comes with its own privileges.

Here are a few pros and cons of temp vs. perm:

Temporary

Transparency & Flexibility

Transparency and flexibility are the most essential things candidates look for before they step into any organization, and both are cited by many candidates looking for temp positions. The length of a project gives a clear picture of how long they will stay on it, and options such as contract extensions, holidays, and flexible working hours attract jobseekers to temp positions. Temp work is a good option for those who want a bit of freedom and flexibility in their role.

Financial Opportunity

Temp work is not just about flexibility and transparency: the financial opportunities for temp workers can be high, potentially higher than for permanent employees. Employers, for their part, don't need to worry about sick pay, holidays, or pensions; they pay a daily rate instead of the package that comes with a permanent position.

Diversity & Experience

Many candidates opt for temp projects because they offer diverse opportunities: they can plan their lifestyle while choosing a role that fits and gives good exposure. There is less office politics to worry about, and they benefit from gaining experience with diverse employers. There are ample opportunities to pick from and work with.

Employer’s Choice

One of the best reasons the contract market continues to thrive is that it's great for employers too: they can pick candidates who match their exact requirements. With restrictions in place, hiring for full-time positions is tougher than hiring for temp positions, and filling a requirement for a set period is manageable by offering a good package. For many employers, contracting is a good alternative, as contractors often find themselves in high demand.

Permanent

Association & Safety

Today, many employees look for two things in a job: a close association with their employer and the safety it provides. Close association and safety are essential in today's work atmosphere, which is why most employees pick permanent jobs. Whether real or perceived, these benefits are more usually associated with permanent roles than with temp positions.

Getting Further

A permanent position is more clearly defined than a temp role and often offers a stronger career path, with clear opportunities for advancement within the organization. Moreover, permanent employees are more likely to reap the rewards of their hard work on a project than temps, who may already be on their next project when the rewards are handed out.

Secure Pay

Choosing a temp role can add big numbers to your pay. However, a guaranteed paycheck at the end of each month is a huge incentive for permanent employees, along with the benefits that come with it: holiday pay, sick pay, and any bonuses the organization might offer.

Know where you'd like to land

Flexibility, diversity, and financial opportunity are great for some employees, but others care more about where they are based and what they do on a daily basis. Permanent roles tend to offer more settled, locked-down employment.

Which to choose?

It is impossible to say whether temp or perm is better; there is no ultimate answer to this question. The right answer is the one that fits you.

But whichever side you choose, we will help you fit into that role, as we have been the best recruitment partner for many years now.

...

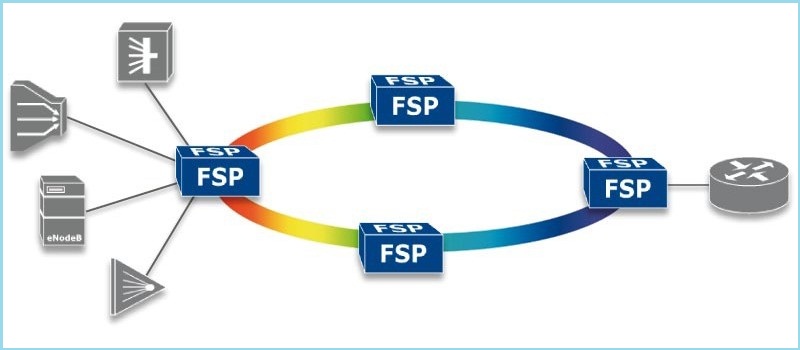

Digitalization, digital networking, research, network automation, education, and educational networks: are these just the buzzwords of recent technology for educational institutions? Why is everyone talking about digitalization and networks, and how are the two interconnected? In this blog, we look at what network automation and education networks are.

Whether we talk about smaller research and education networks or individual campus networks, both share the same aim: supporting education through current network automation strategies and tools. Do these tools and strategies deliver significant efficiencies for smaller institutions, or is the network automation wave more hype than substance?

Let us look at some regional networks: how they succeeded and what challenges they faced while deploying network automation with relatively small, outsourced staff and support. We also discuss the experience of working with this diverse technology, how it raised awareness of the potential value of network automation, and how regional networks can assist smaller schools along this path.

Digital learning and collaboration have become an essential part of today's education, and K-12 schools are implementing digital learning methods. Blended learning methods and one-to-one computing programs are reshaping classroom studies and engaging more students to become tech-savvy. Higher education institutions are adopting BYOD (bring your own device), allowing students to connect their smartphones, laptops, tablets, and other gadgets. This creates unprecedented demand for high-performance connectivity, which institutions must meet with highly reliable campus and data center network solutions.

Challenges:

The rise of new technologies and mobile devices, the growing appetite for applications, and rising security concerns are placing new burdens on educational networks. To meet these challenges, schools are expanding their networks to meet student and faculty expectations for high-performance, highly reliable, always-on connectivity.

The school network is always mission-critical, and downtime is intolerable when lectures, research projects, and assignments depend on it. Educational applications are increasingly diverse and rich, as learning leverages interactive curricula, collaboration tools, streaming media, and digital media. The success of the Common Core assessments depends heavily on connectivity. Universities and colleges with poor-quality or non-ubiquitous network access quickly discover that it is affecting their enrollment rates.

Another challenge is the number and types of Wi-Fi devices students bring to a university or college campus. Students commonly have three or more devices, such as a smartphone, tablet, laptop, gaming device, or streaming media player, and expect flawless connectivity. Meanwhile, higher education is deploying wireless IP phones for better communications, IP video cameras for physical security, and sensors for a more efficient environment. The projections for the Internet of Things, which will connect hundreds of billions of devices in a few short years, are nothing short of staggering.

As student, faculty, and administrator expectations for connectivity rise, the complexity and cost of networking are growing exponentially. On top of this growth, "adding on" networking equipment to old designs is making networks ever more fragile. IT budgets are tight, and technology needs are growing faster than funding.

What if schools took a step back and used this unique opportunity to proactively design their networks for the challenges of today and tomorrow? What if deploying the network were simple rather than a manual, time-consuming chore? What if your initial design and build could scale for years to come, without having to build configurations on the fly every time? What if networks were easier to plan and build, configure and deploy, visualize and monitor, with automated troubleshooting and broad reporting?

Vendors and tools that serve educational networks include Juniper Networks, HP, CODE42, DLT, EKINOPS, Level 3, cloudyCluster, OSI, and many more. It's time for educational institutions to modernize how they design, build, and maintain their networks, taking advantage of automation and modern management tools to achieve scale, consistency, and efficiency.
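Building configurations from a template instead of typing them "on the fly" is the core idea behind this kind of automation. The sketch below uses only the Python standard library (`string.Template`); the config syntax, hostnames, buildings, and VLAN numbers are all hypothetical, and real switches from vendors such as Juniper or HP each have their own CLI or API, so the template would need adapting.

```python
from string import Template

# Hypothetical, vendor-neutral config syntax (not any real vendor's CLI).
SWITCH_TEMPLATE = Template(
    "hostname $hostname\n"
    "vlan $student_vlan name STUDENTS\n"
    "vlan $staff_vlan name STAFF\n"
    "snmp-server location $building\n"
)

# One declarative inventory instead of hand-typed configs per device.
INVENTORY = [
    {"hostname": "lib-sw01", "building": "Library"},
    {"hostname": "sci-sw01", "building": "Science Hall"},
]

# Campus-wide standards defined once and applied to every switch.
STANDARDS = {"student_vlan": 20, "staff_vlan": 30}


def render_configs(inventory, standards):
    """Render one config per switch from the shared template."""
    return {
        dev["hostname"]: SWITCH_TEMPLATE.substitute({**standards, **dev})
        for dev in inventory
    }


for name, config in render_configs(INVENTORY, STANDARDS).items():
    print(f"--- {name} ---")
    print(config)
```

Adding a building then means adding one inventory entry, and changing a campus-wide VLAN means editing one value, which is where the consistency and scale come from.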

...

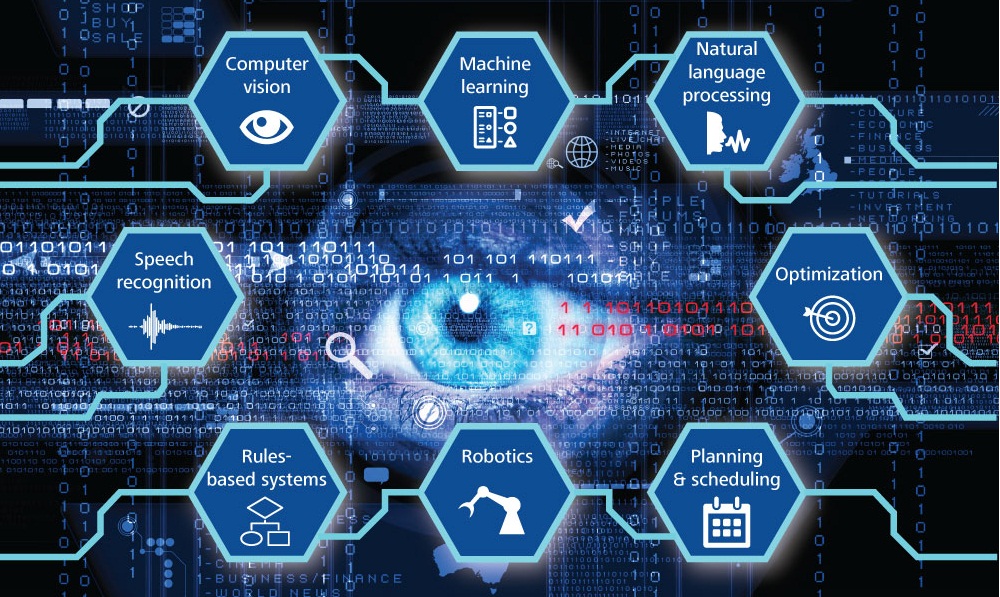

Artificial intelligence has spanned a very large area of computing since the beginning of the computer, but we are getting closer than ever with cognitive computing models. What are these models, and why are we talking about them today?

Cognitive computing comes from a mash-up of cognitive science (the study of the human brain and how it functions) with a touch of computer science, and the results will have far-reaching impacts on our private lives, healthcare, business, and more.

What is Cognitive Computing?

The aim of cognitive computing is to simulate human thought processes in a computerized model. Broadly speaking, the term describes technology platforms built on self-learning systems that use data mining, natural language processing, and pattern recognition to mimic the way the human brain works. Cognitive computing is growing very fast, with the goal of creating automated IT systems capable of solving problems without requiring human assistance.

Cognitive computing systems use machine learning algorithms, which continually acquire knowledge from the data fed into them by mining it for information. These systems refine the way they look for patterns and the way they process data, so they become capable of anticipating new problems and modeling possible solutions.

Cognitive computing is used in numerous artificial intelligence (AI) applications, including expert systems, neural networks, natural language processing, robotics, and virtual reality. Although computers have been faster than humans at calculation and processing for decades, they haven't been able to accomplish some tasks that humans take for granted as simple, like understanding natural language or recognizing unique objects in an image. Cognitive computing represents the third era of computing: we went from computers that could tabulate sums (the 1900s) to programmable systems (the 1950s), and now to cognitive systems.

Cognitive systems, most notably IBM's Watson, rely on deep learning algorithms and neural networks to process information by comparing it to a training set of data. The more data the system is exposed to, the more it learns and the more accurate it becomes over time. Such a neural network can be pictured as a complex "tree" of decisions the computer can make to arrive at an answer.
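The idea of learning from examples rather than from hand-written rules can be shown with the smallest building block of a neural network, a single artificial neuron trained with the classic perceptron rule. This is a minimal teaching sketch, not how Watson or deep learning systems actually work; the training data (grid points labeled by whether they lie above the line y = x) is invented for illustration.

```python
def train_perceptron(samples, labels, epochs=1000, lr=1.0):
    """Classic perceptron rule: nudge the weights toward each misclassified
    example. Stops early once a full pass makes no mistakes."""
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        mistakes = 0
        for (x1, x2), y in zip(samples, labels):
            pred = 1 if w[0] * x1 + w[1] * x2 + b > 0 else 0
            if pred != y:
                delta = lr * (y - pred)  # +1 or -1
                w[0] += delta * x1
                w[1] += delta * x2
                b += delta
                mistakes += 1
        if mistakes == 0:
            break
    return w, b


def predict(w, b, point):
    """Fire (1) if the weighted sum is positive, otherwise 0."""
    x1, x2 = point
    return 1 if w[0] * x1 + w[1] * x2 + b > 0 else 0


# Hypothetical training data: label 1 if the point lies above y = x.
grid = [(x, y) for x in range(-5, 6) for y in range(-5, 6) if x != y]
labels = [1 if y > x else 0 for x, y in grid]

w, b = train_perceptron(grid, labels)
accuracy = sum(predict(w, b, p) == t for p, t in zip(grid, labels)) / len(grid)
print(accuracy)  # 1.0
```

Nobody tells the neuron the rule "y greater than x"; it finds weights that reproduce it from the labeled examples. Because this toy data is linearly separable, the perceptron is guaranteed to converge; real cognitive systems stack many such units and train them on far messier data.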

What can cognitive computing do?

According to a recent TED Talk from IBM, Watson could eventually be applied in a healthcare setting to help collate the span of knowledge around a condition, including patient history, journal articles, best practices, diagnostic tools, and more. It could then analyze that vast quantity of information and provide recommendations as needed.

The consulting doctor would then be able to examine the evidence and weigh treatment options based on a large number of factors, including the individual patient's presentation and history. Hopefully, this will lead to better treatment decisions.

In other words, the goal is not to replace the doctor but to expand the doctor's capabilities by processing the humongous amount of available data, which no human could reasonably process and retain, and by providing a summary and potential applications. This type of process could be applied in any field where large quantities of complex data need to be processed and analyzed to solve problems, including finance, law, and education.

You can also apply these systems in other areas of business, such as consumer behavior analysis, personal shopping bots, travel agents, tutors, customer support bots, security, and diagnostics. The personal digital assistants available today on our phones and computers, like Siri and Google, among others, are not true cognitive systems: they have a pre-programmed set of responses and can respond only to a preset number of requests. But as technology advances, in the near future we will be able to address our phones, computers, cars, and smart houses and get a real, thoughtful response rather than a pre-programmed one.

The future will be more delightful for us as computers become more human-like and expand our capabilities and knowledge. Get ready to welcome the coming era when computers enhance human knowledge and ingenuity in entirely new ways.

...